Graph-Based Multi-Task Learning For Fault Detection In Smart Grid

Timely detection of electrical faults is of paramount importance for the efficient operation of the smart grid. To better equip power grid operators to prevent grid-wide cascading failures, detecting the occurrence and type of a fault must be accompanied by accurately locating it. In this work, we propose a multi-task learning architecture that encodes the graph structure of the distribution network through a shared graph neural network (GNN) to simultaneously classify faults and detect their locations. Deploying GNNs enables representation learning of the grid structure, which can later be used to optimize grid operation. The proposed model has been tested on the IEEE-123 distribution system. Numerical tests verify that the proposed algorithm outperforms existing approaches.

Power distribution grids have an inherent graph structure: buses form the nodes and line segments form the edges. In a GNN, each node can encode the voltage phasors measured at that bus, while the graph itself encodes the topology of the distribution grid. Other machine learning models such as SVMs and random forests, and deep learning architectures such as DNNs, CNNs, or Transformers, do not natively incorporate this graph structure information. A GNN, by contrast, operates on a graph by design, which makes it an ideal architecture for modeling the power distribution grid.
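As an illustration of how the grid can be encoded as a graph, the sketch below builds a node-feature matrix from per-phase voltage magnitudes and angles, and the symmetrically normalized adjacency matrix (with self-loops) used by standard GCN layers. It assumes NumPy, a hypothetical 4-bus radial feeder, and six features per bus (three magnitudes, three angles); the helper names `normalized_adjacency` and `phasor_features` are illustrative and not taken from the authors' code.

```python
import numpy as np


def normalized_adjacency(num_nodes, edges):
    """Return D^{-1/2} (A + I) D^{-1/2}, the propagation matrix used by GCN layers.

    `edges` is a list of (i, j) bus pairs connected by a line segment; the
    distribution feeder is treated as an undirected graph.
    """
    a = np.eye(num_nodes)  # self-loops so each bus keeps its own measurement
    for i, j in edges:
        a[i, j] = 1.0
        a[j, i] = 1.0
    deg = a.sum(axis=1)
    d_inv_sqrt = np.diag(1.0 / np.sqrt(deg))
    return d_inv_sqrt @ a @ d_inv_sqrt


def phasor_features(magnitudes, angles_deg):
    """Stack per-phase voltage magnitudes and angles into a node-feature matrix.

    magnitudes, angles_deg: arrays of shape (num_nodes, 3), one column per phase.
    Angles are converted to radians. Returns shape (num_nodes, 6).
    """
    return np.concatenate([magnitudes, np.deg2rad(angles_deg)], axis=1)


# Hypothetical 4-bus radial feeder: 0 - 1 - 2, and 1 - 3.
edges = [(0, 1), (1, 2), (1, 3)]
a_hat = normalized_adjacency(4, edges)
mags = np.ones((4, 3))
angles = np.tile([0.0, -120.0, 120.0], (4, 1))
x = phasor_features(mags, angles)
print(a_hat.shape, x.shape)  # (4, 4) (4, 6)
```

The normalization keeps feature magnitudes comparable between high-degree buses and feeder ends, which is the usual reason GCNs use it.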

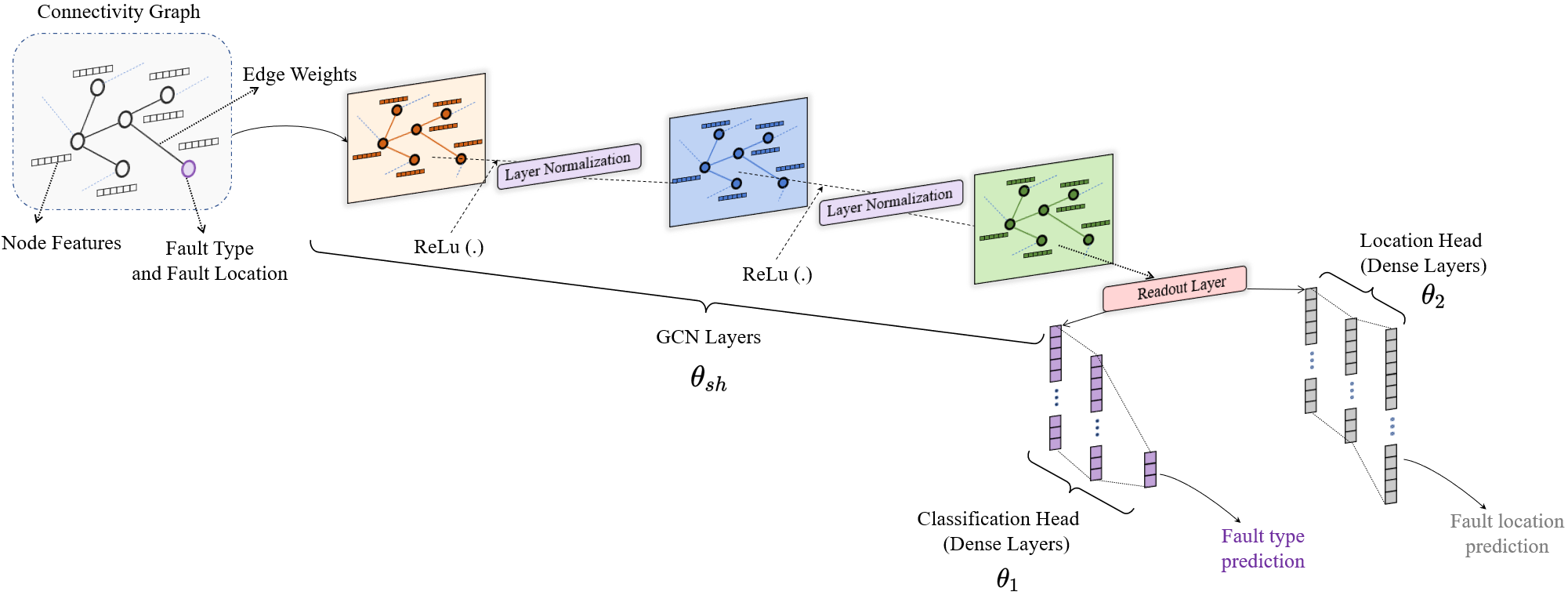

It is possible to use separate models for fault classification and fault localization. However, the two tasks would then share no information. In addition, separate models would unnecessarily increase the number of parameters and the inference time. A multi-task approach allows the tasks to share information through a common backbone and reduces inference time.
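One common way to share information between the two tasks is to train a single network on a weighted sum of the per-task losses, so that gradients from both heads update the shared backbone. The sketch below assumes cross-entropy for both fault-type classification and fault-bus localization, plus a hypothetical balancing weight `weight`; the loss and weighting used in the paper may differ.

```python
import numpy as np


def cross_entropy(logits, target):
    """Softmax cross-entropy for a single example (logits: 1-D array, target: int)."""
    shifted = logits - logits.max()  # subtract max for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum())
    return -log_probs[target]


def multitask_loss(class_logits, loc_logits, fault_type, fault_bus, weight=1.0):
    """Joint objective: fault-type loss plus a weighted fault-location loss.

    `weight` is a hypothetical balancing hyperparameter; during training,
    gradients from both terms flow into the shared GNN backbone.
    """
    return cross_entropy(class_logits, fault_type) + weight * cross_entropy(loc_logits, fault_bus)


# Toy example: 4 hypothetical fault types and 5 candidate buses.
class_logits = np.array([2.0, 0.5, 0.1, -1.0])
loc_logits = np.zeros(5)
loss = multitask_loss(class_logits, loc_logits, fault_type=0, fault_bus=3)
```

Setting `weight` to zero recovers a single-task classifier, which makes it easy to compare the multi-task setup against a classification-only baseline.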

If the proposed model is to be deployed in practice, the voltage phasors encoded in the nodes of the GNN need to be collected by data acquisition devices such as phasor measurement units (PMUs). These devices are extremely costly because they require constant GPS synchronization. For this reason, it is not feasible to collect data from every node in the distribution grid. By allowing for sparse measurements, i.e., evaluating the model when phasors are available at only a subset of nodes, the performance metrics better reflect practical performance.
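A simple way to emulate sparse PMU coverage is to keep the full grid topology but zero out the features of buses without a measurement device. The sketch below assumes NumPy, a random PMU placement controlled by the hypothetical `observed_fraction` parameter, and zero-filling of unobserved buses; the placement strategy and masking scheme used in the paper may differ.

```python
import numpy as np


def mask_unobserved(x, observed_fraction, seed=0):
    """Zero out node features at buses without a (hypothetical) PMU.

    x: (num_nodes, num_features) phasor features.
    Returns the masked features and the boolean mask of observed buses.
    The graph itself is left unchanged, so message passing can still
    propagate information from measured buses to unmeasured ones.
    """
    rng = np.random.default_rng(seed)
    num_nodes = x.shape[0]
    num_observed = max(1, int(round(observed_fraction * num_nodes)))
    observed = np.zeros(num_nodes, dtype=bool)
    observed[rng.choice(num_nodes, size=num_observed, replace=False)] = True
    return x * observed[:, None], observed


x = np.ones((10, 6))
x_sparse, observed = mask_unobserved(x, observed_fraction=0.3)
print(observed.sum())  # 3
```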

The GNN backbone consists of three GCN layers that learn a representation of the input graph. The learned representations are then passed to two separate dense layers: one serves as the classification head and the other as the localization head.
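The backbone-plus-two-heads layout can be sketched as a NumPy forward pass in which each GCN layer computes `H_next = ReLU(A_hat @ H @ W)` with the normalized adjacency `A_hat`. The readouts are assumptions: the classification head applies a dense layer to the mean-pooled node embeddings, and the localization head scores each bus with a dense layer so that a softmax over buses would give the fault location. Weights are random and untrained, and the class name `MultiTaskGCN` and all dimensions are hypothetical.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


class MultiTaskGCN:
    """Forward pass of a shared 3-layer GCN backbone with two dense heads.

    Sketch only: weights are random (untrained), and the readouts are assumptions
    (mean pooling for the fault-type head, a per-bus score for the location head).
    """

    def __init__(self, in_dim, hidden_dim, num_fault_types, seed=0):
        rng = np.random.default_rng(seed)
        dims = [in_dim, hidden_dim, hidden_dim, hidden_dim]
        # Three GCN layers shared by both tasks.
        self.gcn_weights = [rng.normal(0.0, 0.1, (dims[k], dims[k + 1])) for k in range(3)]
        # Classification head: pooled graph embedding -> fault-type logits.
        self.w_cls = rng.normal(0.0, 0.1, (hidden_dim, num_fault_types))
        # Localization head: each bus embedding -> one logit (softmax over buses).
        self.w_loc = rng.normal(0.0, 0.1, (hidden_dim, 1))

    def forward(self, a_hat, x):
        h = x
        for w in self.gcn_weights:
            h = relu(a_hat @ h @ w)  # GCN propagation: ReLU(A_hat H W)
        class_logits = h.mean(axis=0) @ self.w_cls  # shape (num_fault_types,)
        loc_logits = (h @ self.w_loc).ravel()  # shape (num_nodes,)
        return class_logits, loc_logits


# Hypothetical 5-bus path feeder with 6 phasor features per bus.
a = np.eye(5)
for i in range(4):
    a[i, i + 1] = a[i + 1, i] = 1.0
d = np.diag(1.0 / np.sqrt(a.sum(axis=1)))
a_hat = d @ a @ d
x = np.random.default_rng(1).normal(size=(5, 6))

model = MultiTaskGCN(in_dim=6, hidden_dim=16, num_fault_types=4)
class_logits, loc_logits = model.forward(a_hat, x)
print(class_logits.shape, loc_logits.shape)  # (4,) (5,)
```

Mean pooling makes the fault-type prediction independent of node ordering, while the per-bus score keeps the localization output aligned with the buses of the feeder.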

This work was published at MLSP 2023. Use the following information to cite the paper.

Chanda, Dibaloke, and Nasim Yahya Soltani. "Graph-Based Multi-Task Learning For Fault Detection In Smart Grid." In 2023 IEEE 33rd International Workshop on Machine Learning for Signal Processing (MLSP), pp. 1-6. IEEE, 2023.

@inproceedings{chanda2023graph,
  title={Graph-Based Multi-Task Learning For Fault Detection In Smart Grid},
  author={Chanda, Dibaloke and Soltani, Nasim Yahya},
  booktitle={2023 IEEE 33rd International Workshop on Machine Learning for Signal Processing (MLSP)},
  pages={1--6},
  year={2023},
  organization={IEEE}
}